Projects

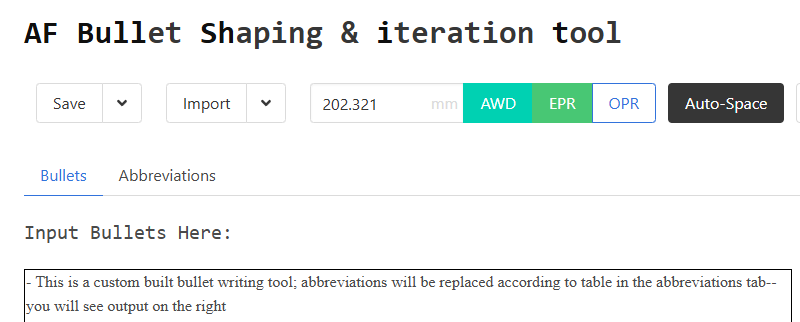

Performance Report Tool

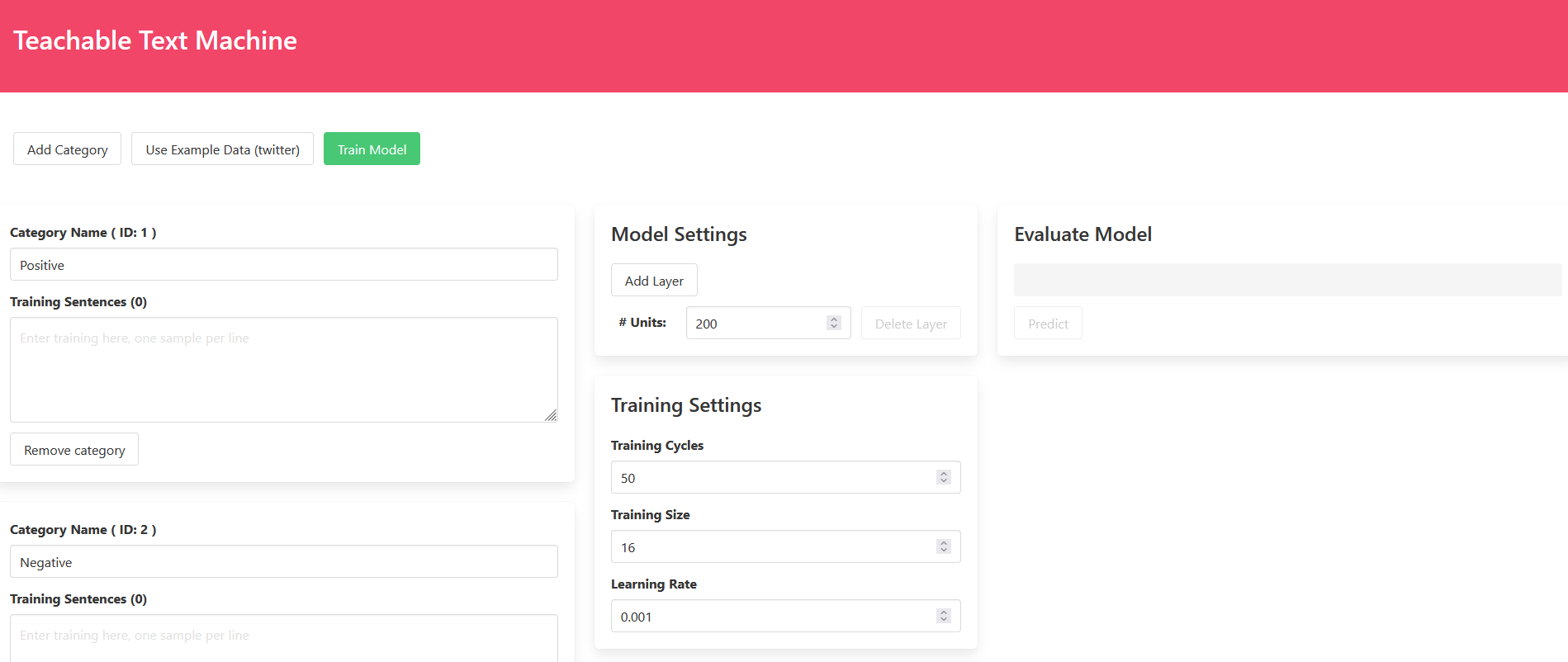

Teachable Text Machine

Home Lab

My “home lab” started with just a Raspberry Pi running Pi-hole. Then I started working with routers and switches at my IT/networking job, and ended up buying a managed switch and a pfSense router to mess around with at home. My IT/networking job turned into DevOps, and I started learning tools like Terraform and Ansible. Around the same time, a coworker told me about a free hypervisor called Proxmox. Things quickly progressed from there, and now my current compute/networking inventory is:

Performance Statement Generator

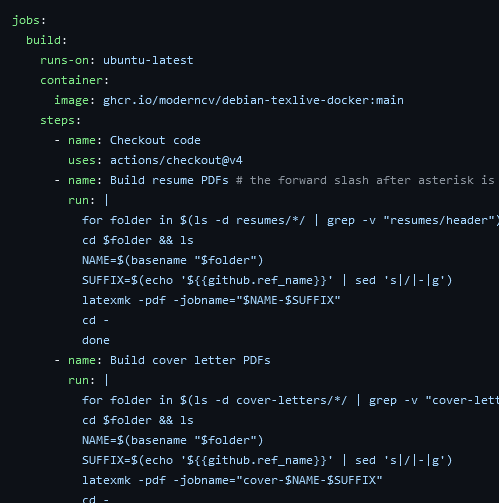

Resume Build Pipeline

Web Product Scraper

Retail arbitrage is a way to make money by acting as a middleman: you buy cheap products in bulk at brick-and-mortar stores, then resell them on marketplaces like Amazon, where there is consistent demand and sales volume. My wife does retail arbitrage and wanted to expand into online arbitrage, which is the same idea but buying from online stores instead. She came to me for help doing this more efficiently.

Dialogue Summarizer with Tuned FLAN-T5 Model

Recently, I took the “Generative AI with Large Language Models (LLMs)” course. As part of the course, I got to create several fine-tuned LLMs using Amazon SageMaker. Overall, I thought the course was a great fit for me: I have a basic machine learning background (I took Andrew Ng’s machine learning course on Coursera back in 2012 and have done a few personal projects with neural networks) and experience coding in Python. The course did a good job of explaining the technical concepts behind LLMs without getting bogged down in the weeds. The hands-on activities were great, with well-integrated AWS lab accounts for actually running the notebooks. The purpose of this post is to document a few key things I learned about building fine-tuned LLMs on top of foundation models.